AI makes perfect liars. Your loneliness makes perfect victims

Interview with Karen Spinner — the self-taught developer who’s been dodging AI-powered romance scammers since day one.

This article continues my series on the explicit and hidden risks women face daily as fintech users.

After my recent story “When love costs €14,000”, I was flooded with messages from women across Europe. Too many of these stories were identical: the same patterns, the same emotional hooks, the same financial damage.

That’s why I sat down with Karen Spinner, a writer, agency owner, and occasional developer who’s been an early adopter of AI and an unwilling early adopter of the new wave of AI-assisted romance scammers.

A Disclaimer from Karen

“I’m not an expert in cyber-security; I’m just a self-taught software developer and early adopter of AI who has ignored, blocked, and deleted numerous messages from a wide range of sketchy characters. In other words, I’m a typical woman on social media.” 😂

1. Technical Perspectives & Emerging Vectors of Manipulation

Mila: As someone who builds and experiments with AI tools, when you hear about real-world cases like that of Sophie from Amsterdam (a marketing consultant who lost €17,300 via a fintech app to a romance scammer), which AI-enabled capabilities stand out to you as decisive factors?

Karen: I don’t think this is primarily a tech problem at all. We’re all spending too much time online, trapped in our algorithmic bubbles. The dating apps are a horror show (so glad I’m married) and it’s harder for adults to make real friends as well as romantic connections. So, people are lonely and more likely to engage uncritically with a seemingly friendly online presence. And they don’t always have close friends who can tell them that guy or gal they just met online is acting suspiciously.

Yes, AI makes it easier for romance scammers to build fake identities, write persuasive messages, and operate at scale.

AI is brilliant at writing phishing emails, but scammers are mostly preying on people’s willingness to believe, not their lack of tech savvy.

2. Targeting Women at Scale: AI Profiling & Automation

Mila: Your StackDigest project focused on semantic search and clustering. How might similar techniques be repurposed by romance scammers to target women aged 25–42 who are recently separated and financially stable?

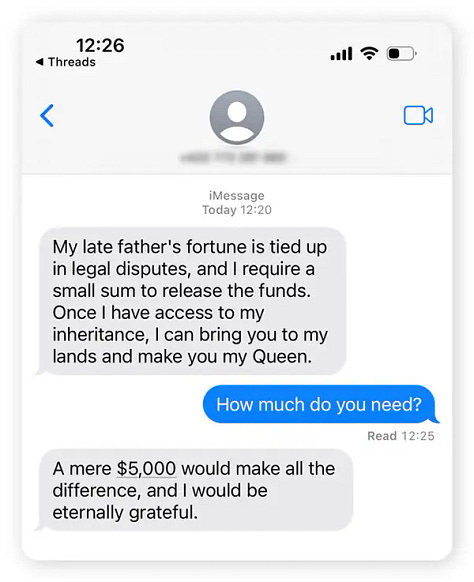

Karen: They can use the same techniques that marketers use, but, you know, for evil.

They can buy names with demographic and contact info from one of many data brokers, load them into a database, and enrich the records with details from publicly available sources like social media comments. Then they can build an “ideal victim profile” (IVP) based on who has fallen for their scams in the past, create multidimensional embeddings for each record, and use machine learning to measure the mathematical distance between the IVP and each contact. The contacts with the highest similarity scores get prioritized.
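To make the “mathematical distance” Karen mentions a little more concrete: one common way to compare embeddings (an assumption here, since the exact measure isn’t specified) is cosine similarity. If the ideal victim profile is represented as a vector $\mathbf{p}$ and contact $i$ as a vector $\mathbf{c}_i$ (symbols introduced only for illustration), the score is:

$$
\operatorname{sim}(\mathbf{p}, \mathbf{c}_i) = \frac{\mathbf{p} \cdot \mathbf{c}_i}{\lVert \mathbf{p} \rVert \, \lVert \mathbf{c}_i \rVert}
$$

A score close to 1 means the contact’s vector points in almost the same direction as the profile. “Prioritizing” contacts then simply means sorting them by this number, which is the same lookalike logic marketers use to find people who resemble their best customers.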

Scammers are basically BDRs (business development reps) who are pitching fake love and companionship. It’s an offering that, if you believe in it, is a lot more appealing than most SaaS products.

“Scammers are basically BDRs who are pitching fake love and companionship.”

— Karen

3. Criminal Models, Open-Source LLMs & the New Accessibility of Abuse

Mila: Tools like WormGPT, FraudGPT, and LoveGPT appear in threat-intelligence reporting. How accessible is AI-driven malicious tooling to non-technical actors?

Karen: Sure, AI-generated content is everywhere, including (especially?) in scammy messages. And if scammers are cold-emailing and DMing women, they’re in the same boat as everyone else doing direct outreach right now. As it’s gotten easier for sellers to send emails and messages at scale, inboxes have gotten crowded, open rates have dropped, and sellers have started sending even more messages to maintain their income. It’s a vicious cycle.

Also, scammers don’t need to buy a specially tuned criminal platform. They can just download one of the many thousands of open-source models available on Hugging Face. With a little extra work, they could easily connect it to a database and churn out hyper-personalized messages. Or they could fine-tune it on their past messages to potential victims.

4. Operating at Scale: Managing 40–50 Women at Once

Mila: Reports confirm scammers can manage 40–50 simultaneous conversations through scripts and automation. What infrastructure makes this possible?

Karen: Honestly, they could do this with the same tools that small businesses use to automate outreach, like ManyChat and n8n. Or, if they didn’t want to run criminal activity on a third-party platform that might report them, they could use a GPT or even Claude Code to help them clone existing tools and run them locally. And the wealth of open-source solutions means you can set up whatever kind of malicious infrastructure you want while (mostly) avoiding the need for APIs.

5. Deepfakes: The State of the Technology

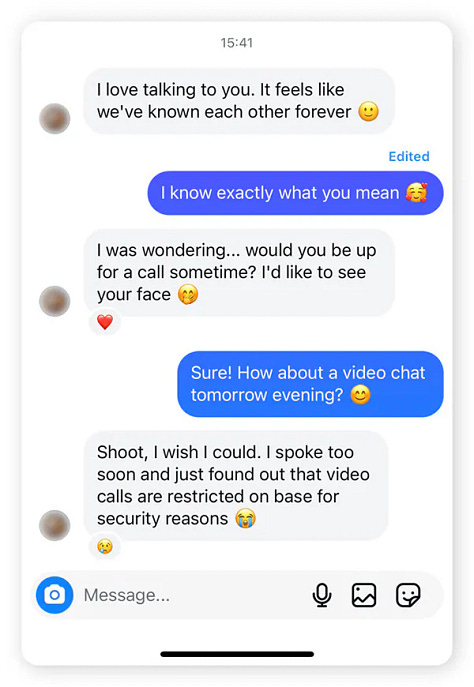

Mila: Many victims report scammers refusing live video calls. We now see cases where scammers use deepfaked video calls. What limitations still exist?

Karen: Deepfake video and audio are really good, and they’re getting better. But real-time, interactive deepfake video is still pretty new. Like the AI avatars that companies are using for HR screening, interactive deepfakes still have some imperfections: odd visual inconsistencies, slow response times, scripted-sounding conversation, etc. Also, while I’ve done a little research into this, I’m not an expert!

6. What Still Works: Behaviour-Based Verification

Mila: With deepfake-enabled deception rising, what can ordinary users still rely on?

Karen: You could try asking the person in the video to do something random and unpredictable, like pat their head or hold up three fingers. Basically, anything that might cause the AI to go “off script.” There is also the Reality Defender Deepfake Detection App for Zoom, although I haven’t actually tested it myself.

“AI isn’t the danger. Your emotional autopilot is.”

— Mila Agius

Mila’s Conclusions: The Real Fuel Behind Modern Romance Scams

After years of studying these cases, I am convinced of one thing:

The root cause of modern romance scams is not AI. It is loneliness.

AI simply turbocharges operations that were already thriving.

Across multiple surveys and media summaries:

The United States appears among the world’s loneliest nations, particularly among middle-aged adults.

About 30% of U.S. adults report weekly loneliness.

In the UK, nearly half of surveyed adults say they’ve become more lonely in recent years.

In Europe, loneliness rates are notably higher in southern and eastern countries, and lower in northern and western ones.

Loneliness pushes women into predictable emotional patterns — into what I call “autopilot mode.” This is exactly the psychological posture scammers rely on.

But here’s the part that matters:

Awareness changes everything.

When women:

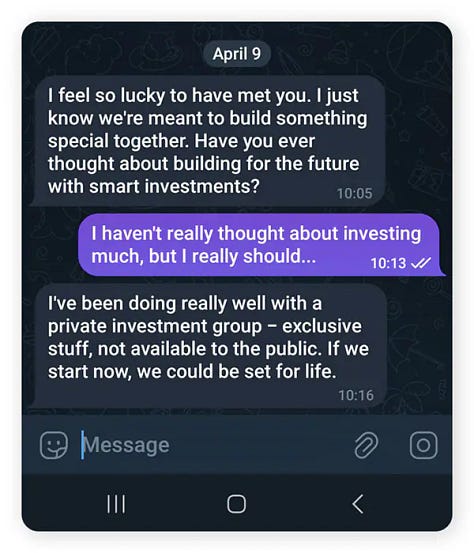

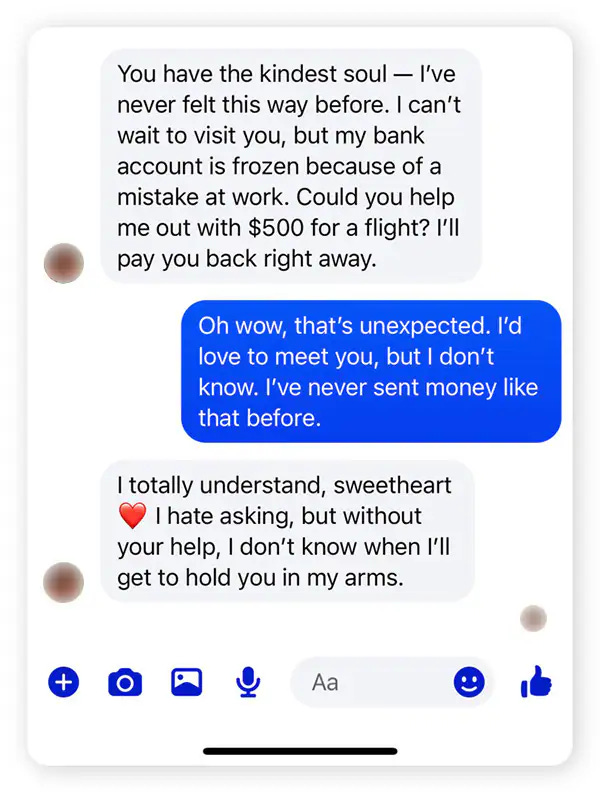

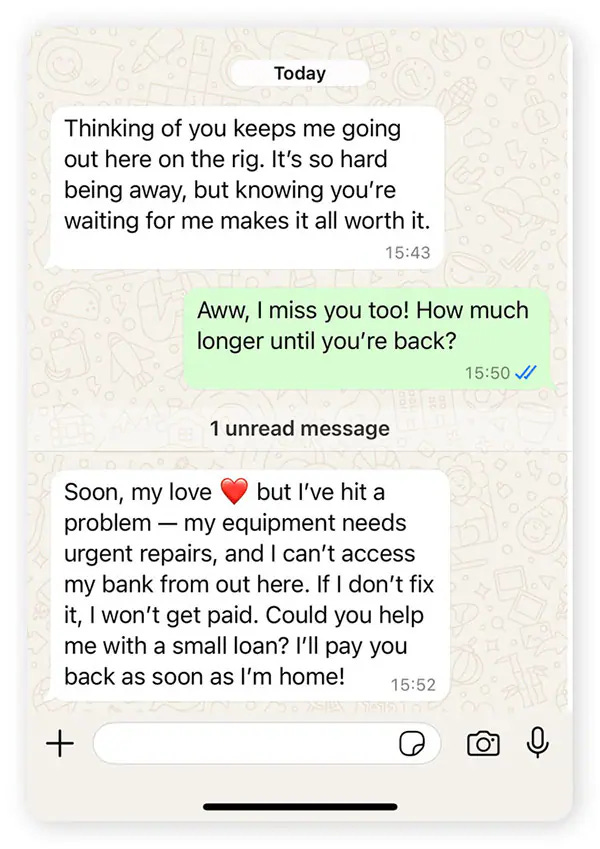

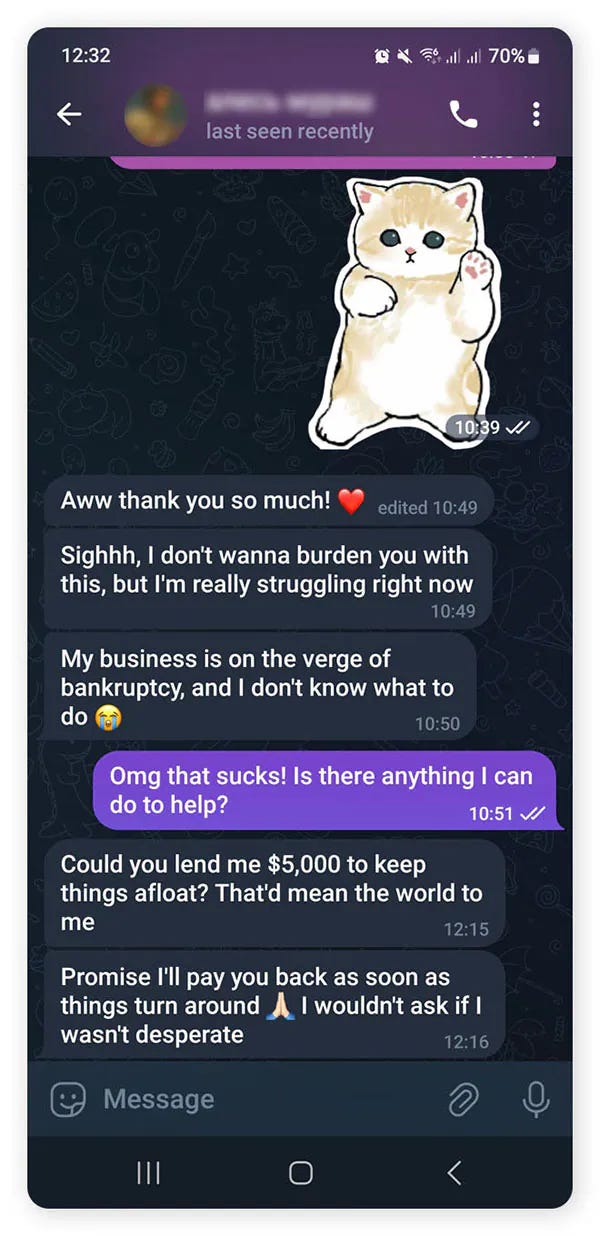

understand the behavioural scripts

recognise emotional triggers

know the red flags

and speak openly about loneliness instead of hiding it

…the entire power dynamic shifts.

No woman chooses to become a victim.

No woman wants to feel foolish, manipulated, or reduced to a statistic on a spreadsheet of criminal revenue!

Knowledge is not just power; in this context, it is protection.

Coming Next: Part II

In the next article, we will examine:

the psychological vulnerabilities scammers exploit

behavioural patterns that reveal deception

how “human-in-the-loop” systems could make online platforms dramatically safer

what practical defences modern fintech users can adopt

Stay with us: the fight against modern fraud begins with understanding how human and machine behaviours intersect!